Ambient Light TV with a Raspberry Pi and a Webcam (Part 2)

In this post I'll go into more detail about how I built the ambient light rig. Here is the previous part in case you missed it. I'm not the first one to make one of these, so this is not going to be a perfect Instructable. Instead, I'll go deeper into the things I did differently (like the camera) and link to the resources I used for all the other stuff. Here we go!

Most rigs out there use an Arduino linked to a PC. This is relatively easy to implement, but the downside is that it only works with a video stream coming directly from the PC's graphics card (for most people, this is acceptable). You can also buy ready-made systems that work in a similar way, such as LiveLight.

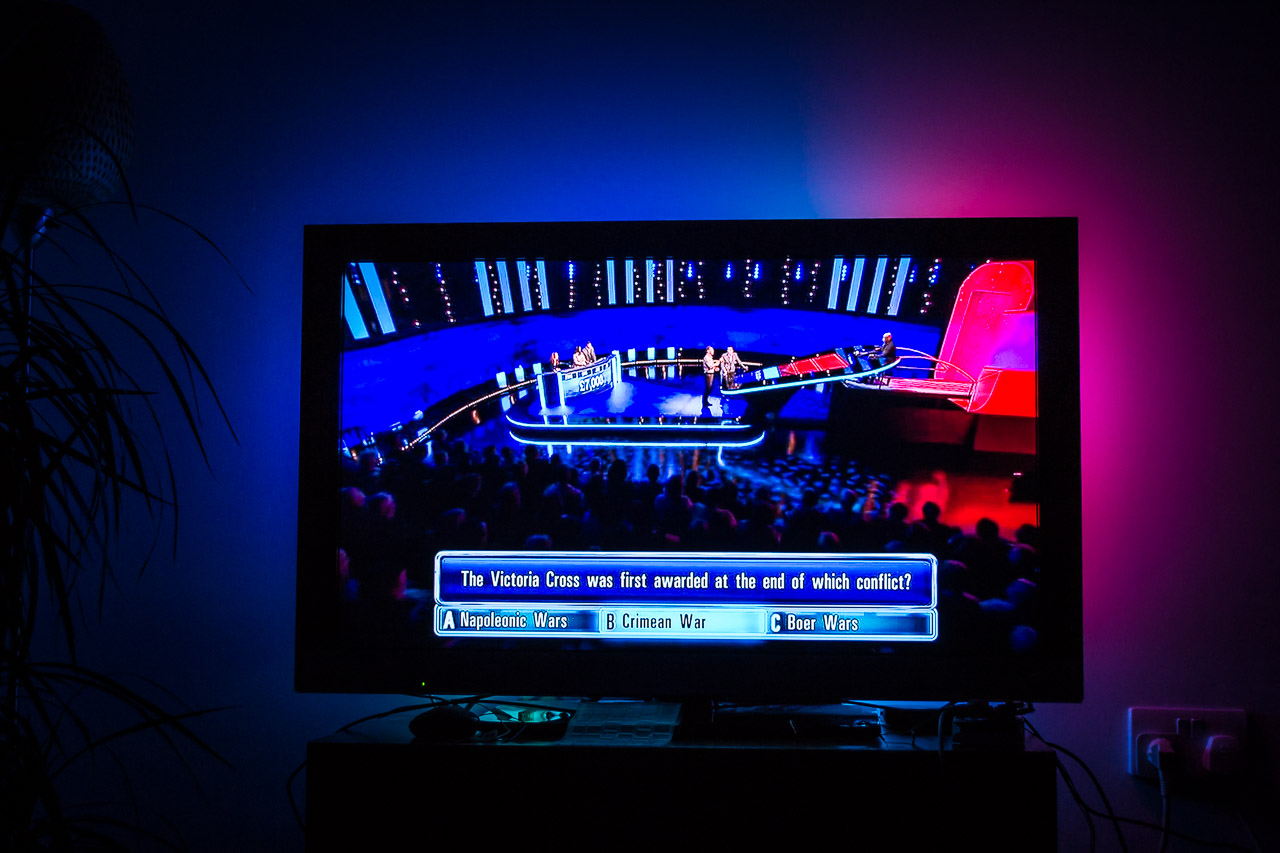

But for myself, I wanted every feed to work: PC, Cable TV, Apple TV and my gaming consoles.

For this to work, I would need access to all the feeds going into my TV. Intercepting an HDMI feed before it goes into the TV is technically possible, but the gear I needed would have been way too expensive. So the only cheap alternative was to use a webcam, knowing that frame rate, lag and color inaccuracies were going to be issues.

The camera is best placed pointing directly at the screen to avoid glare and viewing angle color inaccuracies. In my case, I only had two viable options:

As you can see in the second picture, I had to close the curtains because at that angle the TV reflects everything from the window next to it. This wasn't a big problem, since I prefer to close the curtains when watching a movie or playing games anyway.

Pure blacks tend to look more bluish too, but I can live with that. This actually has a nice effect when the screen goes dark, as the whole rig glows blue and provides some light in the room.

For the webcam, I used a Logitech C250 (because I already owned one). It can capture at different resolutions and has a manual focus ring. The manual focus ring is great, because you can intentionally set it out of focus to get color averaging for free.
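To make the "color averaging for free" point concrete, here is a minimal Python sketch of what the defocused lens saves you from doing in software: averaging a patch of sharp pixels into one color. The function name `average_color` and the sample patch are made up for illustration and are not taken from the server code.

```python
def average_color(pixels):
    """Return the mean (r, g, b) of a list of (r, g, b) tuples, using integer math."""
    if not pixels:
        raise ValueError("need at least one pixel")
    n = len(pixels)
    sums = [0, 0, 0]
    for r, g, b in pixels:
        sums[0] += r
        sums[1] += g
        sums[2] += b
    # Integer division keeps the result in the 0-255 range of the inputs.
    return tuple(s // n for s in sums)


# A sharp 2x2 patch: two pure red pixels and two pure blue pixels.
patch = [(255, 0, 0), (255, 0, 0), (0, 0, 255), (0, 0, 255)]
print(average_color(patch))  # (127, 0, 127), a single purple value
```

With the lens out of focus, neighbouring pixels already arrive blended like this, so each LED can sample fewer pixels and still get a representative color.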

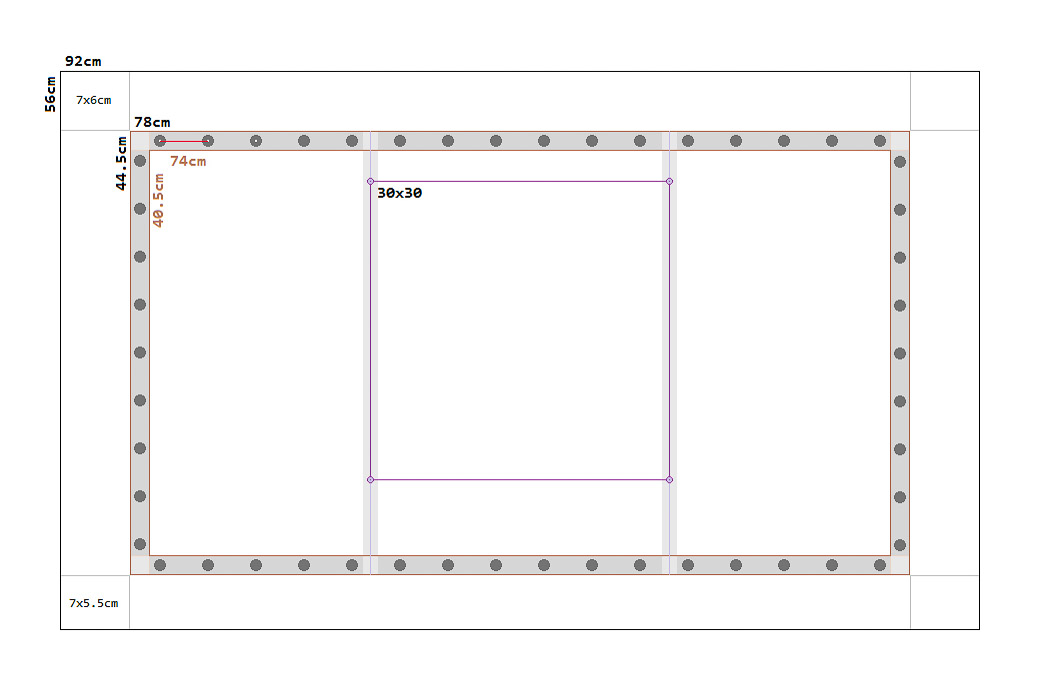

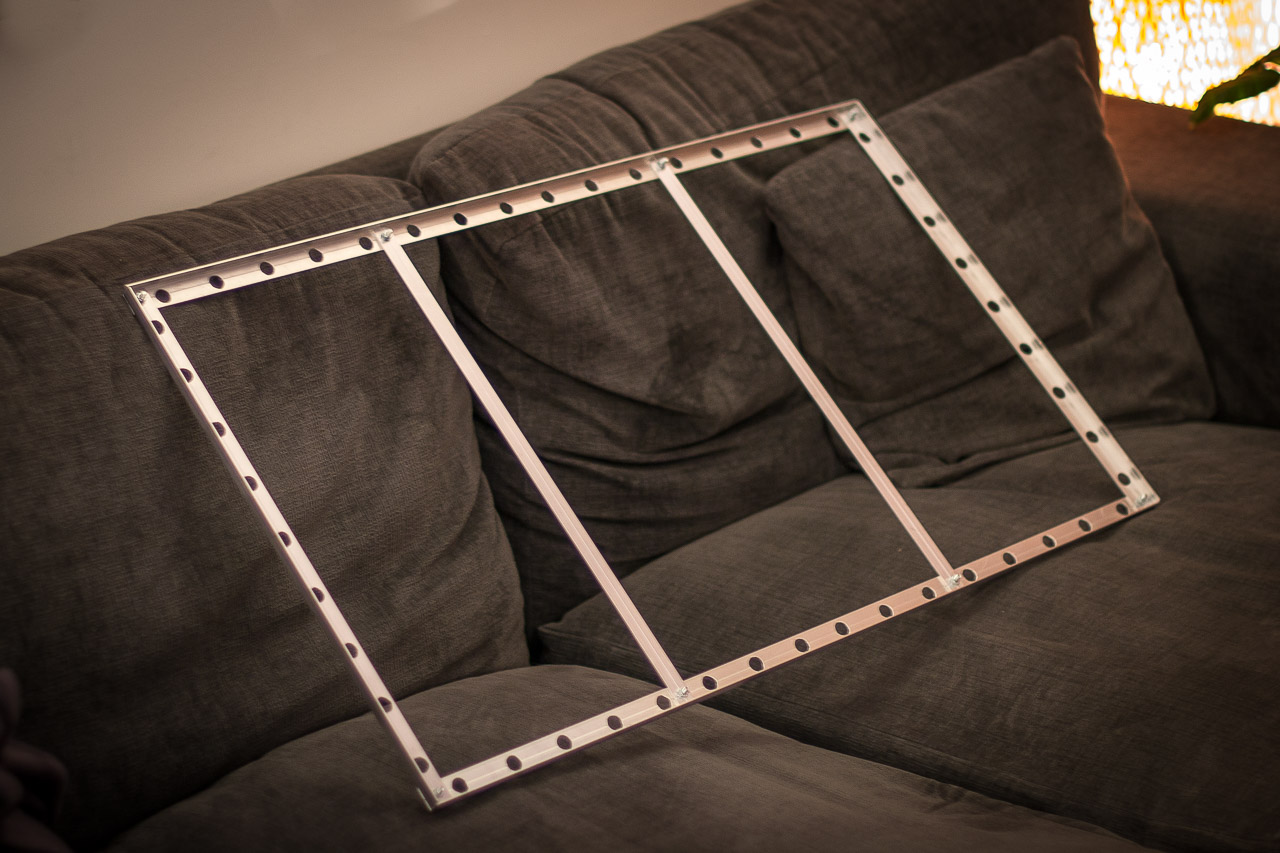

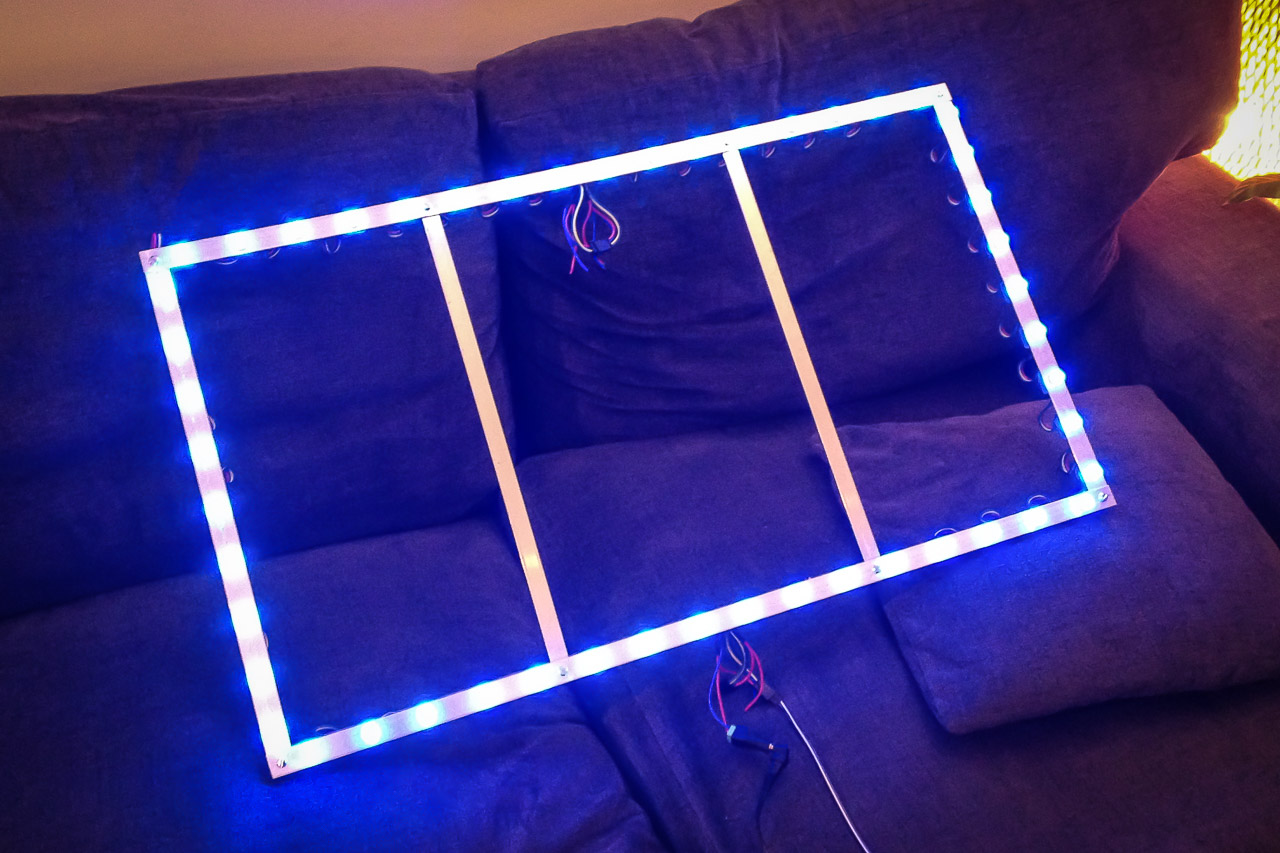

The Adalight tutorial was a good guide for building the frame. This is what I used:

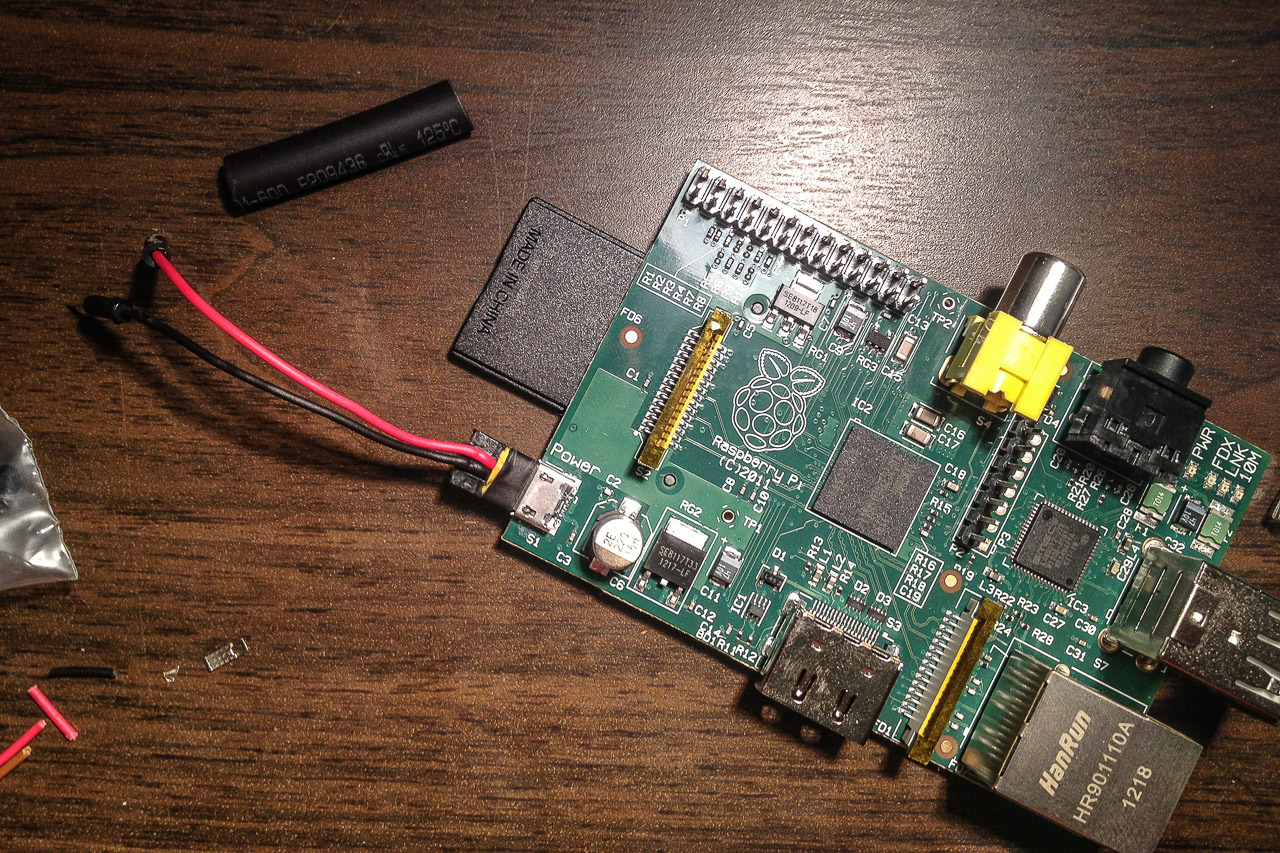

- 1x Raspberry Pi (any version works)

- 2x 12mm Diffused Flat Digital RGB LED Pixels (Strand of 25)

- 1x Stontronics 5V DC 4A Power Supply Unit T2502ST (Powers both the LEDs and the Pi)

- 1x 100x75x40mm Housing for the Pi

- 1x 2.1mm Power Socket (Rated 5A)

- 1x Rocker Switch

- 1x Micro USB

- Some grommets

- 4x Angled aluminium profiles

- Small nuts and bolts to attach the profiles
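Since one 4A supply feeds both the LEDs and the Pi, it's worth doing a quick power budget. The sketch below is a rough check in Python. The per-pixel draw (60 mA at full white) and the Pi's draw (700 mA) are assumptions for illustration, not measurements from my rig, so check the datasheets for your own parts.

```python
# Rough 5V power budget for the parts list above.
# ASSUMPTIONS (not measured): per-pixel and Pi current draw.
LED_COUNT = 2 * 25          # two strands of 25 pixels
MA_PER_LED_FULL_WHITE = 60  # assumed worst case per pixel, in mA
PI_MA = 700                 # assumed draw of the Pi, in mA
SUPPLY_MA = 4000            # the 4A supply from the parts list

led_ma = LED_COUNT * MA_PER_LED_FULL_WHITE
total_ma = led_ma + PI_MA
headroom_ma = SUPPLY_MA - total_ma

print(f"LEDs at full white: {led_ma} mA")
print(f"Total with the Pi:  {total_ma} mA")
print(f"Headroom:           {headroom_ma} mA")
```

Under these assumptions the worst case is 3700 mA, which leaves only about 300 mA of headroom. In practice the LEDs are rarely all at full white at the same time.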

The following gallery shows my process making the frame.

At this point, I started to work on the server code for the Raspberry Pi. The first implementation was a Python program running on Debian Linux in the LXDE GUI mode. This approach failed for two reasons:

- The GUI mode was using too many resources on the Pi, so the experience was quite sluggish.

- The Python GPIO library (wiringPi) I was using wasn't fast enough to update 50 LEDs.

So I decided to go with a custom C++ implementation using the native wiringPi library. I wrote all the code on my PC and synced it to the Pi using rsync. The source code for the server is hosted on GitHub. I'm working on a readme, but the code should be fairly easy to follow if you're familiar with C++.

I used OpenCV to capture frames from the webcam, Jansson as a JSON library for saving settings, log4cplus as a logging library, and Boost for network communication with a client program running on my PC.

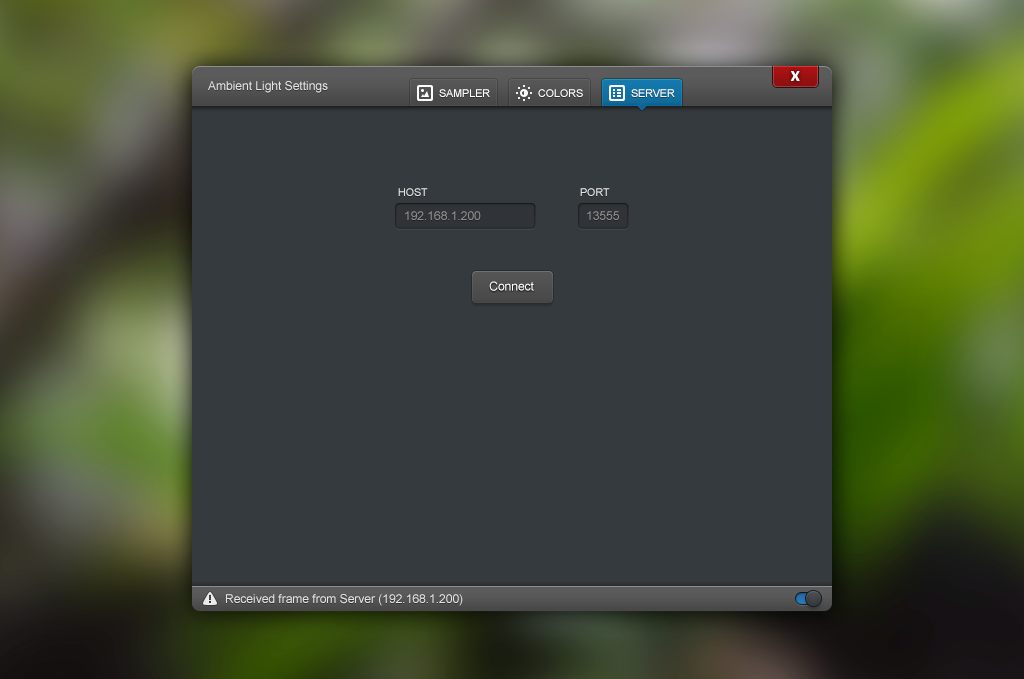

The client program is used for tweaking the camera color settings and setting the capture bounds of the frame. It communicates directly with the server on the Raspberry Pi. I'll go into more detail about the client app in Part 3. Here's how it looks:

At this point I had everything pretty much working, and the main task now was to clean everything up and attach the Pi to the frame.
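To give an idea of what the capture bounds are for, here is a minimal Python sketch that splits the border of the bounded area into one sample rectangle per LED. This is an illustration only and not the code from the server: the function `edge_regions`, the 16/9 split of the 50 LEDs, the sample depth and the example bounds are all assumptions.

```python
def edge_regions(bounds, across, down, depth):
    """Return one (x, y, w, h) sample rectangle per LED, clockwise from top-left.

    bounds: (x, y, w, h) of the TV picture inside the captured frame.
    across: LEDs along the top edge (and again along the bottom edge).
    down:   LEDs along the right edge (and again along the left edge).
    depth:  how far each rectangle reaches into the picture, in pixels.
    """
    x, y, w, h = bounds
    regions = []
    # Top edge, left to right.
    for i in range(across):
        x0 = x + i * w // across
        x1 = x + (i + 1) * w // across
        regions.append((x0, y, x1 - x0, depth))
    # Right edge, top to bottom.
    for i in range(down):
        y0 = y + i * h // down
        y1 = y + (i + 1) * h // down
        regions.append((x + w - depth, y0, depth, y1 - y0))
    # Bottom edge, right to left.
    for i in reversed(range(across)):
        x0 = x + i * w // across
        x1 = x + (i + 1) * w // across
        regions.append((x0, y + h - depth, x1 - x0, depth))
    # Left edge, bottom to top.
    for i in reversed(range(down)):
        y0 = y + i * h // down
        y1 = y + (i + 1) * h // down
        regions.append((x, y0, depth, y1 - y0))
    return regions


# Hypothetical bounds inside a 160x120 capture, with 16 + 9 + 16 + 9 = 50 LEDs.
regions = edge_regions((10, 10, 140, 100), across=16, down=9, depth=8)
print(len(regions))  # 50
print(regions[0])    # (10, 10, 8, 8)
```

Each rectangle can then be averaged into a single color for its LED. Tightening the bounds in the client keeps the TV bezel and the wall behind it out of those averages.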

Capturing at the full 640x480 resolution was really slow. I expect this is due to the limited USB bandwidth on the Pi. So I had to go all the way down to the lowest capture size, 160x120, which gives me a relatively stable capture frame rate of 20 FPS.
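To put rough numbers on the resolution drop, here is a small Python calculation of the raw data rate at each capture size. It assumes the webcam sends uncompressed frames at 2 bytes per pixel (as in the common YUYV format). That is an assumption about the capture format, not something I verified on the C250.

```python
def raw_rate(width, height, fps, bytes_per_pixel=2):
    """Bytes per second for uncompressed video (2 bytes/pixel is an assumption)."""
    return width * height * bytes_per_pixel * fps


full = raw_rate(640, 480, 30)   # full resolution at the 30 FPS you'd hope for
small = raw_rate(160, 120, 20)  # lowest capture size at the measured 20 FPS

print(f"640x480 @ 30 FPS: {full / 1e6:.1f} MB/s")
print(f"160x120 @ 20 FPS: {small / 1e6:.2f} MB/s")
print(f"Pixels per frame: {640 * 480 // (160 * 120)}x fewer at 160x120")
```

Under that assumption, 160x120 moves 16 times fewer pixels per frame, and the stream drops from roughly 18.4 MB/s to under 1 MB/s. Since each LED only needs one averaged color, the lost detail doesn't matter much.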

The LED frame lags about two frames behind the TV. This is noticeable, but I've found that you eventually get used to it. Blending previous frames together with the current one also helps a lot for smoother color transitions on the LEDs.
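For a sense of scale, two frames at 20 FPS is about 100 ms of lag. As for the blending, one simple way to do it is an exponential moving average, sketched below in Python. This is an illustrative stand-in and not necessarily the blend the server uses; the function name `blend` and the `weight` parameter are made up for this example.

```python
def blend(previous, current, weight=0.5):
    """Move an LED color part of the way from its previous value to the new one.

    weight is the share of the new frame: 1.0 means no smoothing,
    smaller values give smoother but slower transitions.
    """
    return tuple(int(p + (c - p) * weight) for p, c in zip(previous, current))


# The screen cuts from black to white; the LED follows over a few frames.
led = (0, 0, 0)
history = []
for _ in range(3):
    led = blend(led, (255, 255, 255))
    history.append(led)

print(history)  # [(127, 127, 127), (191, 191, 191), (223, 223, 223)]
```

The trade-off is that smoothing adds its own delay on top of the capture lag, so the weight is worth tuning by eye.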

The other reason for the slow framerate is the Auto Exposure setting on the webcam. Basically, when the image on screen is dark, the webcam takes longer to capture the frame (longer exposure). The driver I'm currently using on the Pi (using Debian Linux) doesn't allow me to set this to a fixed exposure. I'm still trying alternatives and will post once I have a solution.

All in all, I'm pretty pleased with the results. If I manage to turn off Auto Exposure, I think I might be able to hit 30 FPS. (Edit: Confirmed! I managed to set a fixed Exposure setting, and I'm getting a smooth 30 FPS now.)

I'm eventually going to write Part 3, which is more about the client. All the code is on GitHub, so don't wait up.

If you're interested in building one of these, here are some resources I used to get me started: